Meta Introduces Llama 3.2, a Commercially Available LLM for Free Use

2024-09-27

1. Executive Summary

Llama 3.2 is the latest family of large language models (LLMs) developed by Meta, and it is the first Llama release to include multimodal models with image processing capabilities. These models accept both text and images, which enables more advanced tasks. The release also includes lightweight models that deliver strong performance in constrained environments such as smartphones and edge devices. This article analyzes the technical background, current usage, and future outlook of Llama 3.2.

2. Background and Current Analysis

In recent years, artificial intelligence (AI) technology has advanced rapidly, and large language models have been widely adopted across many fields. Traditional LLMs focused primarily on text processing, but as the needs of businesses and researchers become more diverse, interest is growing in multimodal approaches that integrate text and images. Llama 3.2 responds to this trend and improves on previous Llama models in the following ways:

Multimodal Capability:

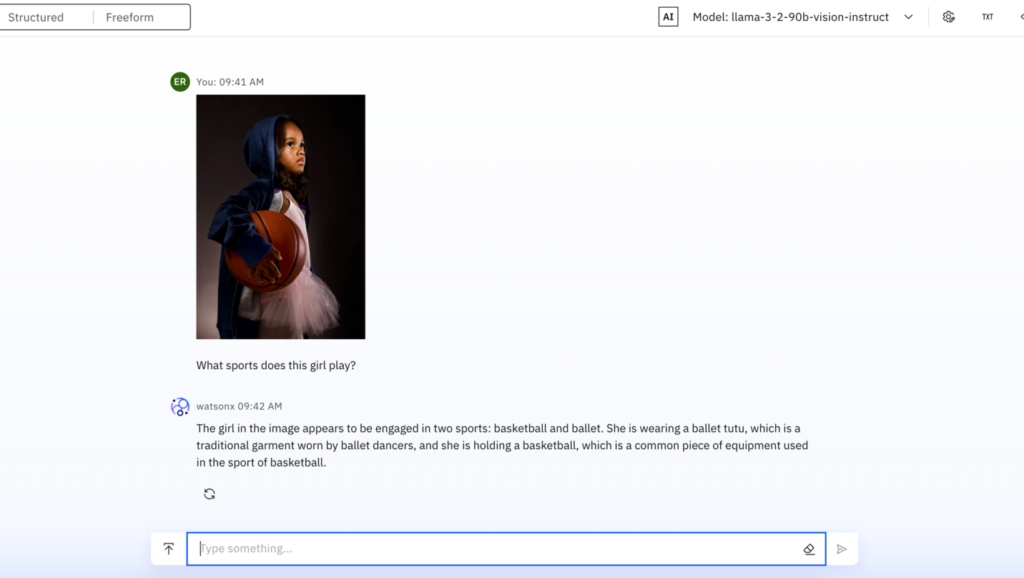

Llama 3.2 is the first Llama model to combine image recognition with text generation. It uses an adapter layer to connect an image encoder to the language model, enabling high-precision processing while keeping images and text consistent with each other.
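As a rough illustration of the adapter idea, the sketch below lets text token vectors attend over image encoder outputs in a single cross-attention step and adds the result back through a gate. It is a simplified model under stated assumptions, not Meta's implementation: the learned projection matrices are omitted, and the function name, the tanh gate, and the toy vectors are all chosen for illustration.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def cross_attention_adapter(text_states, image_states, gate=0.0):
    """Each text vector attends over the image vectors (hypothetical adapter).

    The attended image information is added back to the text vector,
    scaled by tanh(gate). With gate=0 the text states pass through
    unchanged, so the language model's original behavior is preserved.
    Projections for queries, keys, and values are left out for brevity.
    """
    scale = 1.0 / math.sqrt(len(text_states[0]))
    g = math.tanh(gate)
    output = []
    for query in text_states:
        weights = softmax([dot(query, key) * scale for key in image_states])
        attended = [
            sum(w * value[i] for w, value in zip(weights, image_states))
            for i in range(len(query))
        ]
        output.append([t + g * a for t, a in zip(query, attended)])
    return output


# Toy inputs: two text token vectors and three image patch vectors.
text = [[1.0, 0.0], [0.0, 1.0]]
image = [[2.0, 0.0], [0.0, 2.0], [1.0, 1.0]]

frozen = cross_attention_adapter(text, image, gate=0.0)  # image path closed
mixed = cross_attention_adapter(text, image, gate=1.0)   # image path open
```

Starting the gate at zero is one common way to attach a new input path to a pretrained model without disturbing what it already does; the gate then opens gradually during training.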

Model Lightness and Adaptability:

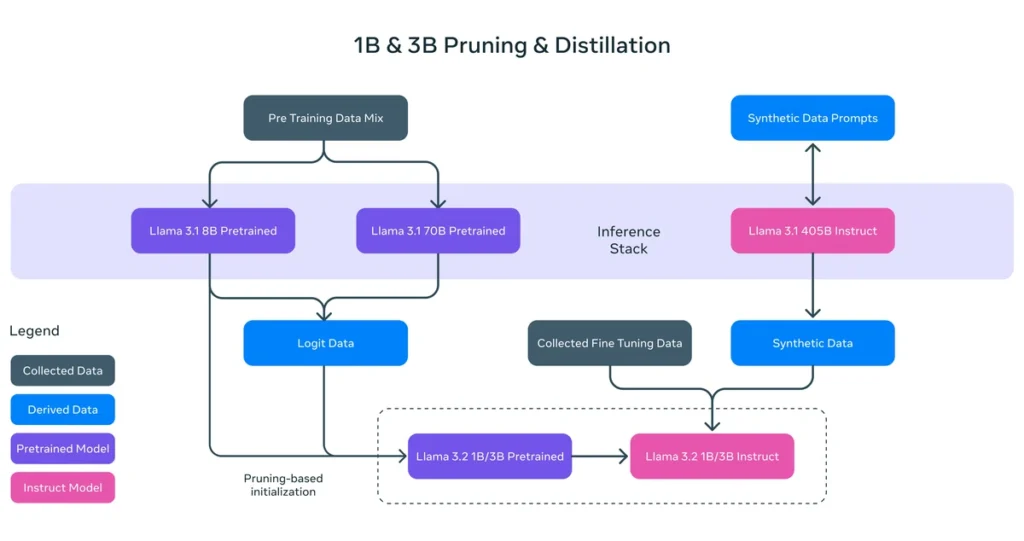

The lightweight 1B and 3B parameter models use a combination of knowledge distillation and pruning to deliver high performance with fewer computational resources. This enables local use on smartphones and edge devices.
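The two techniques can be sketched in a few lines. The example below is a generic illustration, not Meta's training recipe: it uses simple unstructured magnitude pruning on a flat weight list and a temperature-softened KL divergence as the distillation loss. The function names, the temperature value, and the toy numbers are assumptions.

```python
import math


def softmax(logits, temperature=1.0):
    """Softmax over logits divided by a temperature."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on temperature-softened distributions.

    The student is trained to match the teacher's full output
    distribution rather than only the single correct token.
    """
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def magnitude_prune(weights, sparsity):
    """Zero out the smallest-magnitude fraction of the weights."""
    k = int(len(weights) * sparsity)
    by_magnitude = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    drop = set(by_magnitude[:k])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]


pruned = magnitude_prune([0.5, -0.1, 0.05, -2.0], sparsity=0.5)
loss_same = distillation_loss([1.0, 2.0, 3.0], [1.0, 2.0, 3.0])
loss_diff = distillation_loss([3.0, 2.0, 1.0], [1.0, 2.0, 3.0])
```

Pruning removes capacity and usually costs some accuracy; distillation from a larger teacher is a way to recover part of that accuracy in the smaller model.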

Open-Source Strategy:

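Llama 3.2's model weights are openly available under Meta's community license, allowing developers and businesses to download and run the models locally and to fine-tune them for specific use cases. Because data does not have to leave the organization's own infrastructure, this makes Llama 3.2 an accessible option for companies concerned about data privacy.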

3. Main Content

3.1 Technical Innovations of Llama 3.2

Llama 3.2 improves on previous Llama models through the following technical changes.

Integrated Processing of Images and Text:

Llama 3.2 integrates an image encoder into the language model, allowing it to understand and process images and text together. It is particularly well suited to tasks such as interpreting charts, diagrams, and scientific illustrations.

Figure 1: Image Understanding with Llama 3.2

Development of Lightweight Models:

The 1B and 3B models use pruning and knowledge distillation to substantially reduce memory usage (see Table 1). As a result, they can run on mobile devices, which contributes to user privacy and security because data can stay on the device.

Figure 2: Overview of Pruning and Knowledge Distillation
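To give a sense of scale, the following back-of-the-envelope sketch estimates how much memory the weights alone would need at several numeric precisions. It assumes nominal parameter counts of exactly 1 billion and 3 billion, and it ignores activations, the key-value cache, and runtime overhead, so real memory use will be higher. The helper name is hypothetical.

```python
# Storage cost of one parameter at common precisions, in bytes.
BYTES_PER_PARAM = {"fp32": 4.0, "bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gib(num_params, dtype):
    """Memory for the weights only, in GiB (2**30 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 2**30


# Nominal sizes; the released models' exact parameter counts differ slightly.
for name, params in [("1B", 1e9), ("3B", 3e9)]:
    for dtype in BYTES_PER_PARAM:
        print(f"{name} {dtype}: {weight_memory_gib(params, dtype):.2f} GiB")
```

The arithmetic shows why both model size and precision matter on a phone: halving the bytes per parameter halves the weight footprint, independently of any savings from pruning.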

3.2 Real-World Applications

Data Analysis and Classification:

Llama 3.2 is highly effective in tasks that require integrating images and text to extract and organize information, such as analyzing receipt data or classifying product information. This is expected to be useful in industries like finance, healthcare, and retail.
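A receipt workflow of this kind usually asks the model for structured output and then validates it before it reaches downstream systems. The sketch below shows only that validation step. The prompt wording, the field names, and the sample reply are hypothetical, and the model call itself is replaced by a hard-coded string.

```python
import json

# Fields we expect back, with their allowed Python types (illustrative).
REQUIRED_FIELDS = {"merchant": str, "date": str, "total": (int, float)}


def build_receipt_prompt():
    """Instruction sent to the model together with the receipt image."""
    return (
        "Read the attached receipt image and reply with JSON only, "
        'using the keys "merchant", "date" (YYYY-MM-DD), and "total".'
    )


def parse_receipt_reply(reply_text):
    """Validate the model's reply; raise ValueError if it is malformed."""
    try:
        data = json.loads(reply_text)
    except json.JSONDecodeError as exc:
        raise ValueError(f"reply is not valid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ValueError("reply is not a JSON object")
    for key, expected in REQUIRED_FIELDS.items():
        if not isinstance(data.get(key), expected):
            raise ValueError(f"missing or mistyped field: {key}")
    # Keep only the fields we asked for and drop anything extra.
    return {key: data[key] for key in REQUIRED_FIELDS}


# Stand-in for a real model response to build_receipt_prompt().
sample_reply = (
    '{"merchant": "Example Mart", "date": "2024-09-27", '
    '"total": 18.4, "note": "extra field"}'
)
receipt = parse_receipt_reply(sample_reply)
```

Treating the model's reply as untrusted input keeps a misread receipt from silently entering accounting or inventory data.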

Building AI Agents:

Llama 3.2's multimodal capabilities make it possible to build AI agents that autonomously perform tasks such as web browsing or product searches.
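The core of such an agent is a loop in which the model either requests a tool call or returns a final answer. The sketch below shows that loop with a scripted stand-in in place of Llama 3.2. The tool, the action format, and the tiny catalog are all hypothetical.

```python
def search_products(query):
    """Toy product search over a hard-coded catalog (illustrative only)."""
    catalog = {"usb-c cable": 9.99, "laptop stand": 24.50}
    return {name: price for name, price in catalog.items() if query.lower() in name}


TOOLS = {"search_products": search_products}


def scripted_model(history):
    """Stand-in for the model: request one tool call, then answer."""
    if not any(step["role"] == "tool" for step in history):
        return {"tool": "search_products", "args": {"query": "cable"}}
    result = history[-1]["content"]
    return {"answer": f"Found {len(result)} matching product(s)."}


def run_agent(task, model, max_steps=5):
    """Alternate between model decisions and tool execution."""
    history = [{"role": "user", "content": task}]
    for _ in range(max_steps):
        action = model(history)
        if "answer" in action:
            return action["answer"]
        tool = TOOLS[action["tool"]]
        history.append({"role": "tool", "content": tool(**action["args"])})
    raise RuntimeError("agent did not finish within max_steps")


answer = run_agent("Find me a cable", scripted_model)
```

The step limit is a deliberate safeguard: an agent that can call tools repeatedly needs a bound so that a confused model cannot loop forever.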

4. Future Outlook and Strategic Insights

Integration of Diverse Data Modalities:

Future versions of Llama are expected to incorporate additional data modalities, such as voice and sensor data, and to be applied in specialized domains such as finance and medical imaging. This would enable more capable AI systems, improve efficiency, and foster new business models.

Enhanced Privacy and Security:

With increasing concerns about data privacy, demand is growing for lightweight models that can run locally. The lightweight Llama 3.2 models can process data offline on the device, which reduces the security risks of sending data to external services.

Development of Industry-Specific Models:

In the future, specialized versions of Llama, optimized for industries like healthcare, law, and manufacturing, are expected to emerge. These industry-specific models will offer tailored solutions to meet precise needs, supporting more accurate business operations.

5. Conclusion and Key Takeaways

Llama 3.2 is a multimodal AI model family that goes beyond previous Llama models, particularly in its ability to integrate and understand both images and text. Its lightweight models significantly improve usability across a wider range of devices. As the integration of further data modalities progresses, Llama 3.2 has the potential to change how industries work and is expected to find broad application in business and research.

Key Takeaways:

・Llama 3.2 supports multimodal processing, allowing it to handle text and images simultaneously.

・Its lightweight variants enable usage on mobile and edge devices.

・It offers advantages in data privacy and security, and the development of industry-specific models is a key growth area.

Source

・OpenLM.ai, "Llama 3.2: Integrating Vision with Language Models"

・WIRED, "Meta Releases Llama 3.2—and Gives Its AI a Voice"

・Geeky Gadgets, "New Meta Llama 3.2 Open Source Multimodal LLM Launches"

・IBM, "Meta’s Llama 3.2 models now available on watsonx, including multimodal 11B and 90B models"

・Analytics India Magazine, "Meta Launches Llama 3.2, Beats All Closed Source Models on Vision"

About NITI

Our mission is to deliver cutting-edge technology swiftly and bring innovation to the real world. We tackle various real-world challenges both domestically and internationally with top-tier AI technology.

Why Not Start with a Casual Consultation?

Why not come and have a chat with us? Talking can broaden your perspective and help you see future actions and solutions. Please feel free to contact us.